ParaPara: Synthesizing Pseudo-2.5D Content from Monocular Videos for Mixed Reality

Published in The 2018 CHI Conference on Human Factors in Computing Systems (ACM CHI 2018, Poster), 2018

Recommended citation: Dong-Hyun Hwang and Hideki Koike. "ParaPara: Synthesizing Pseudo-2.5D Content from Monocular Videos for Mixed Reality." Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. 2018. https://dl.acm.org/doi/abs/10.1145/3170427.3188596

Authors

Dong-Hyun Hwang and Hideki Koike

Tokyo Institute of Technology

Abstract

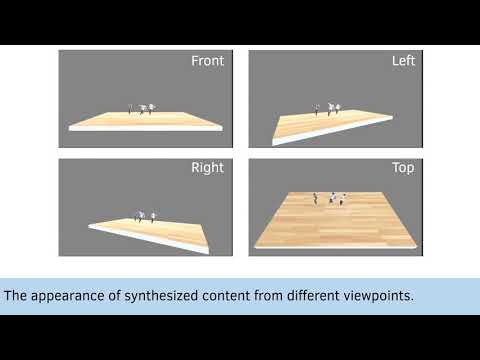

In this work, we propose a simple yet effective method for synthesizing a pseudo-2.5D scene from a monocular video for mixed reality (MR) content. We also present ParaPara, a system that applies this method. Most previously proposed systems convert real-world objects into 3D graphic models using expensive equipment, which is a barrier for individuals or small groups who want to create MR content. ParaPara takes four points in an image and their manually estimated distances, and synthesizes MR content by applying deep neural networks and simple image processing techniques to monocular videos. The synthesized content can be viewed through an MR head-mounted display, and spatial mapping and spatial sound are applied to support interaction between the real world and the MR content. Because it can create such content from the large number of videos that have already been captured, the proposed system is expected to lower the entry barrier to creating MR content.
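The abstract does not spell out how the four image points and their estimated distances are turned into scene geometry. As a rough illustration only, and not the authors' implementation, the sketch below assumes a standard pinhole camera model and back-projects each point along its camera ray to the given distance. The function name `back_project`, the intrinsics (`fx`, `fy`, `cx`, `cy`), the pixel coordinates, and the distances are all hypothetical values chosen for the example.

```python
import math


def back_project(u, v, distance, fx, fy, cx, cy):
    """Return the 3D camera-space point at `distance` along the ray through pixel (u, v).

    Assumes a pinhole camera with focal lengths (fx, fy) and principal point (cx, cy).
    This is an illustrative assumption, not a detail taken from the paper.
    """
    # Direction of the ray through the pixel, with z fixed to 1.
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    # Scale the ray so that its Euclidean length equals the estimated distance.
    scale = distance / norm
    return (x * scale, y * scale, scale)


# Hypothetical inputs: four corner pixels of a 1280x720 frame and
# manually estimated distances in meters (top edge farther than bottom edge).
corners_px = [(0, 0), (1280, 0), (1280, 720), (0, 720)]
distances_m = [3.0, 3.0, 2.0, 2.0]
fx = fy = 1000.0
cx, cy = 640.0, 360.0

corners_3d = [
    back_project(u, v, d, fx, fy, cx, cy)
    for (u, v), d in zip(corners_px, distances_m)
]

for point in corners_3d:
    print(tuple(round(c, 3) for c in point))
```

Four such 3D points would be enough to place a textured quad in the MR scene, which is one plausible reading of "pseudo-2.5D"; the actual system may use the points differently.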