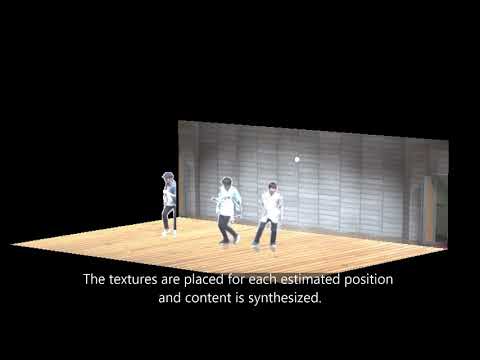

MonoMR: Synthesizing Pseudo-2.5D Mixed Reality Content from Monocular Videos

Published in Applied Sciences 2021, 11(17), 7946 (SCIE, IF: 2.679, Full paper)

Recommended citation: Dong-Hyun Hwang and Hideki Koike. 2021. "MonoMR: Synthesizing Pseudo-2.5D Mixed Reality Content from Monocular Videos" Applied Sciences 11, no. 17: 7946. https://doi.org/10.3390/app11177946 https://www.mdpi.com/2076-3417/11/17/7946

Authors

Dong-Hyun Hwang and Hideki Koike

Tokyo Institute of Technology

Abstract

MonoMR is a system that synthesizes pseudo-2.5D content from monocular videos for mixed reality (MR) head-mounted displays (HMDs). Unlike conventional systems that require multiple cameras, MonoMR lets casual end-users generate MR content from a single camera. To synthesize the content, the system first detects people in the video sequence with a deep neural network, then estimates each detected person’s pseudo-3D position with our proposed algorithm, which uses a homography matrix. Finally, the person’s texture is extracted using a background subtraction algorithm and placed at the estimated 3D position. The synthesized content can be played on an MR HMD, and users can freely change their viewpoint and the content’s position. To evaluate the efficiency and interactive potential of MonoMR, we conducted performance evaluations and a user study with 12 participants. We also demonstrated the feasibility and usability of MonoMR for generating pseudo-2.5D content in three example application scenarios.
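
The position-estimation step mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's algorithm, whose details are not given here. It only shows the general idea of using a homography to map an image point onto a ground plane. It assumes that the homography is fitted from four or more hand-picked image-to-ground correspondences, and that a person's ground contact point is the bottom center of their detection bounding box. The function names `estimate_homography`, `foot_point`, and `image_to_ground` are hypothetical.

```python
import numpy as np


def estimate_homography(src, dst):
    """Fit a 3x3 homography from 4+ point pairs with the direct linear transform.

    src: image points (x, y) in pixels; dst: ground-plane points (u, v).
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    a = np.asarray(rows, dtype=float)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(a)
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def foot_point(bbox):
    """Assumed ground contact point: bottom center of (x_min, y_min, x_max, y_max)."""
    x_min, _y_min, x_max, y_max = bbox
    return ((x_min + x_max) / 2.0, float(y_max))


def image_to_ground(h, point):
    """Map an image point to ground-plane coordinates through the homography."""
    x, y = point
    p = h @ np.array([x, y, 1.0])
    return p[:2] / p[2]


# Illustrative values only: a floor rectangle (2 x 4 units) seen as a trapezoid.
image_corners = [(100, 400), (540, 400), (400, 200), (240, 200)]
ground_corners = [(0, 0), (2, 0), (2, 4), (0, 4)]
H = estimate_homography(image_corners, ground_corners)

person_bbox = (300, 100, 340, 400)  # hypothetical detector output
ground_xy = image_to_ground(H, foot_point(person_bbox))
```

In a pipeline like the one the abstract describes, `ground_xy` would give the location at which the extracted person texture is placed. A real system would also need to handle detections whose feet are occluded or outside the frame.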